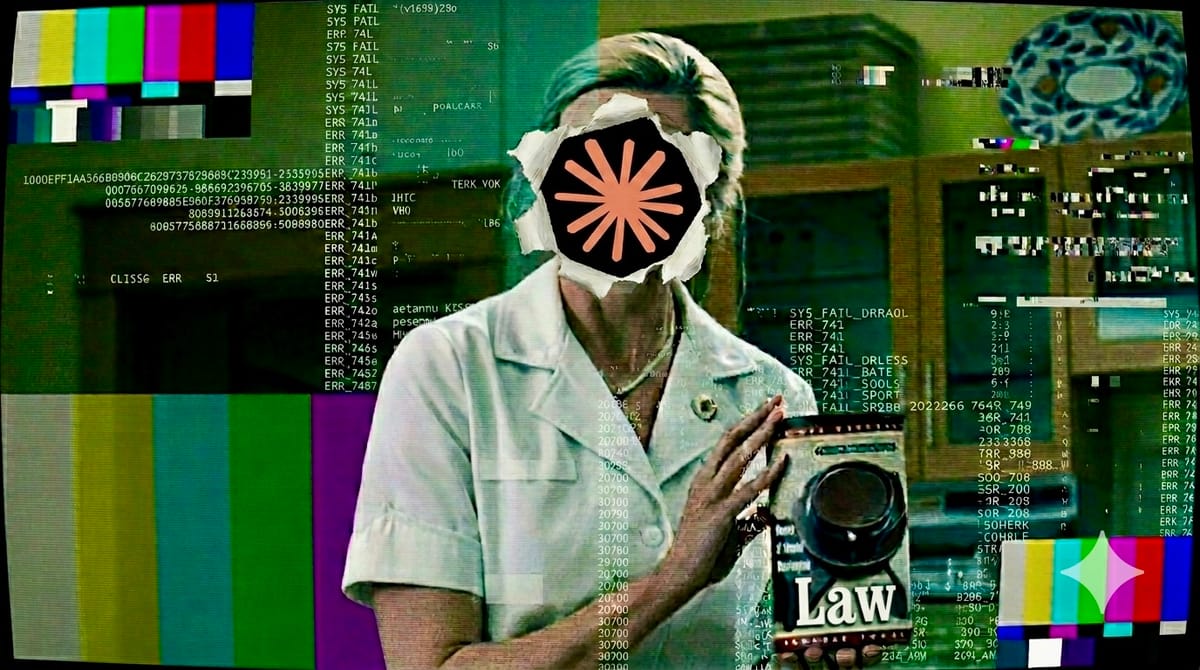

“Who You Talkin’ To?”

By: Paco Campbell

Published: Thursday, February 26th, 2026

“Why don’t you let me fix you some of this new Mococoa drink… all natural cocoa beans from the upper slopes of Mount Nicaragua, no artificial sweeteners.”

It lands in the middle of a marital breakdown.

Truman is spiraling. He’s asking if his life is real. The walls are closing in.

And Meryl pitches cocoa.

He stares at her.

“What the hell are you talkin’ about? Who you talkin’ to?”

She doesn’t stop. She insists she’s tried other cocoas. This one is the best.

That’s the fracture.

Not the falling stage light.

Not the radio glitch.

The moment intimacy is interrupted by inventory.

“No artificial sweeteners,” she says, advertising purity inside a life that is entirely synthetic.

And once Truman realizes she isn’t speaking to him, the architecture of his world shifts.

Hold that image.

Because we’ve seen this before.

When the Platform Reaches Into the Drawer

In 2019, Amazon launched a private-label wool sneaker that looked suspiciously like the hero product from Allbirds.

Wool wasn’t new.

Minimalist sneakers weren’t new.

Sustainable branding wasn’t new.

Amazon didn’t invent the category.

It observed velocity.

Search frequency.

Conversion rates.

Return patterns.

Review language.

Price sensitivity.

Allbirds co-founder Joey Zwillinger publicly called out the knockoff — with composure. But the structural shift was already visible.

Amazon wasn’t talking to the founder.

It was talking to the market.

And it had telemetry the founder didn’t.

The Legal Brief Was Always There

The legal corpus has been digitized for decades — statutes, case law, filings, law reviews, sample contracts. If a foundation model can draft a passable NDA or summarize a brief, that ability did not require siphoning your firm’s client folder.

Legal text was abundant.

What wasn’t abundant was judgment in motion.

You can train a model on millions of contracts and teach it structure.

You cannot teach it “this feels commercially survivable” without watching humans react.

Watching the Rewrites

For two years, lawyers have used general-purpose AI tools to refine clauses, summarize depositions, tighten language, and explain regulatory complexity.

Often under enterprise agreements that restrict the use of proprietary documents for training. There is no public evidence of systematic violation of those boundaries.

The leverage doesn’t require misconduct.

Even when raw documents are excluded from training, platforms can observe patterns:

Which task categories dominate usage.

Where users request revisions.

Which workflows drive repeat engagement.

What correlates with paid tiers.

They don’t need your specific contract.

They need to see that contract review is sticky.

When thousands of professionals repeatedly adjust outputs — shorter, firmer, less ambiguous — the model improves its approximation of that refinement pattern.

Legal text teaches structure.

Human edits teach posture.

Watson Tried to Write the Script

After Watson's win on Jeopardy!, IBM tried to build vertical expertise the old way.

IBM hired consultants. Curated datasets. Tried to inject domain knowledge top-down.

It tried to script the courtroom.

Foundation models did something simpler.

They built the stage.

Let professionals walk onto it.

And watched which arguments kept happening.

Watson tried to manufacture judgment.

Modern platforms observed it.

If Your Moat Is Busywork, You’re Toast

Here’s the part no one wants to say plainly.

If all you are is a ChatGPT wrapper —

if your moat is prompt templates, workflow glue, and polished busywork —

you are rehearsal.

Platforms optimize around repetition. If thousands of lawyers are using a general-purpose model to review contracts and refine clauses, the abstraction layer collapses. The feature moves down-stack.

This isn’t theft.

It’s gravity.

The only defensible moat isn’t formatting.

It’s judgment.

Judgment under uncertainty.

Judgment under liability.

Judgment shaped by experience, not syntax.

AI can approximate tone.

It cannot assume risk.

It cannot sign the document.

It cannot stand in court.

It cannot absorb the reputational blast radius.

“Human in the loop” isn’t a safety blanket.

It’s the only thing the loop can’t eat.

The Dome Is Still There

In The Truman Show, nothing explodes when Truman asks, “Who you talkin’ to?”

The streets still function.

The neighbors still wave.

The sky stays blue.

But he understands something new.

The conversation has another audience.

Foundation models moving into legal isn’t dystopia.

The clauses still draft.

The efficiencies are real.

The value is tangible.

But the reasoning engine sits above the workflow.

And once you see that, you start asking a different question:

When the platform speaks…

who is it speaking to?

In case I don’t see ya…

Good afternoon.

Good evening.

And good night.